🛠 This portfolio is a work in progress 🧰

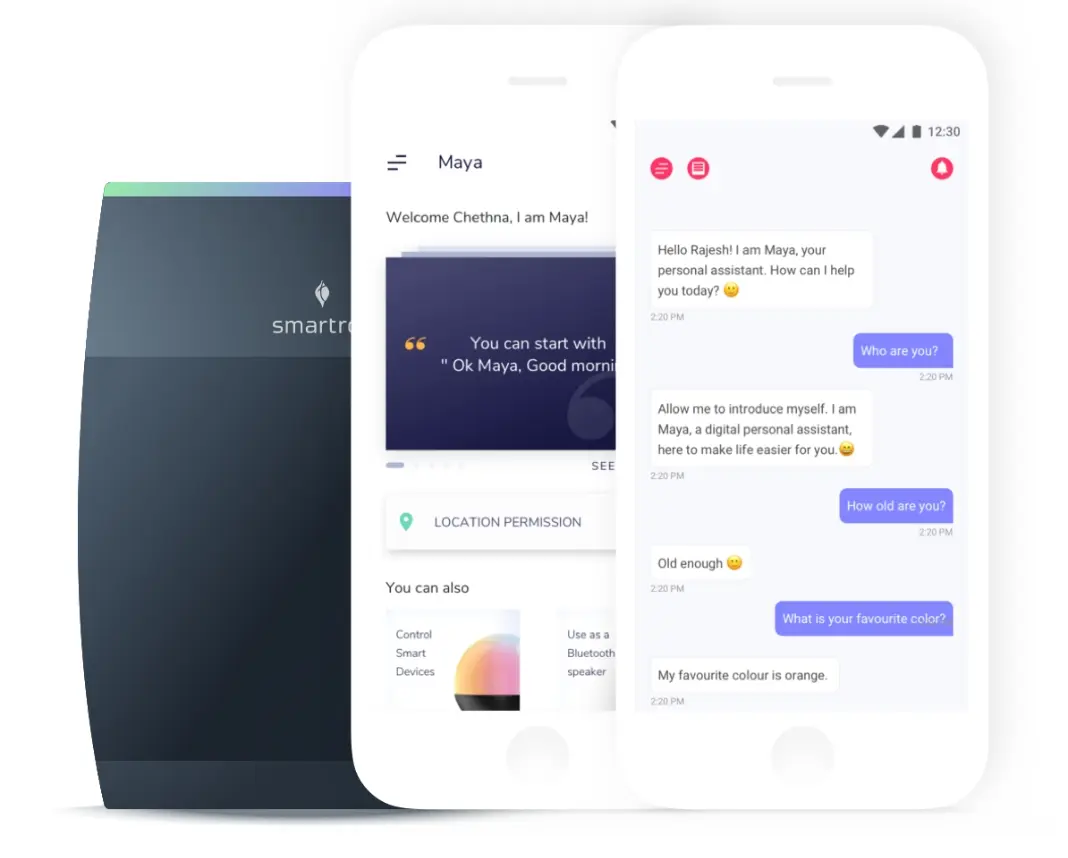

— an AI smart home voice assistant

Summary

Smartron’s Maya was an ambitious attempt to challenge Google Assistant and Amazon Alexa. Working closely with top experts in NLP, AI, and IoT, I gained deep, hands-on exposure to voice interaction design, UX, and the underlying technology and electronics.

Impact

Through extensive research, behavioral data, and controlled testing, we found that fully humanizing an AI assistant often made users feel even more like they were talking to a robot. Using UX testing, we fine-tuned Maya's responses to be clear and easy to understand, an approach that was well received during our investor pitch for a funding round.

However, due to a funding crunch, Maya and its resources were merged with Alexa, which prevented us from achieving real-world impact.

Details

The goal was to develop an assistant that could rival Alexa

I designed voice experiences, chat interfaces, and a companion app in close collaboration with visual designers, ML

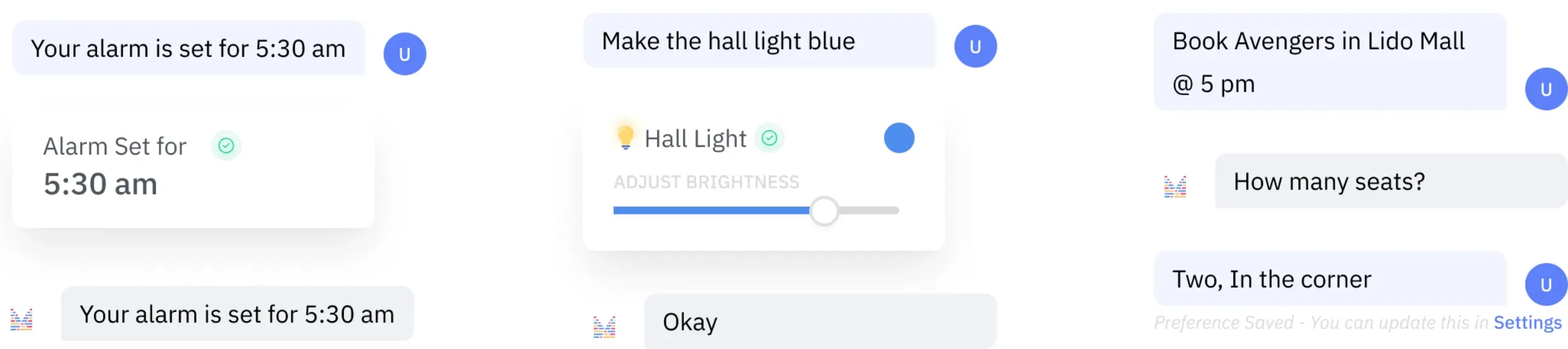

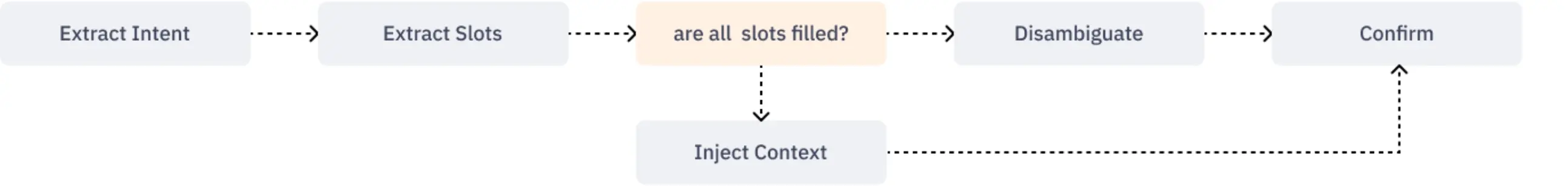

Intents, slots, response templates & disambiguations

Whether in voice responses or the chat UI, users seek consistency because it reduces cognitive load. We focused on a seamless, unified experience across platforms and kept that consistency throughout every interaction.
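To make the idea of shared templates concrete, here is a minimal Python sketch, not Maya's actual implementation. It assumes the NLU returns an intent name plus a list of candidate values for each slot; the template strings, the intent names `order_food` and `get_weather`, and the `render` function are all hypothetical. One template per intent serves both voice and chat, and a slot with several candidates triggers a disambiguation question instead of a guess.

```python
from dataclasses import dataclass, field
from typing import Dict, List

# Hypothetical templates: one string per intent, rendered identically for the
# voice channel (passed to TTS) and the chat UI (shown as text).
TEMPLATES = {
    "order_food": "Ordering {dish} from {restaurant}.",
    "get_weather": "Here's today's weather in {city}.",
}
MISSING_PROMPT = "Which {slot} would you like?"
DISAMBIGUATION_PROMPT = "Did you mean {options}?"


@dataclass
class ParsedIntent:
    name: str
    # Each slot maps to the candidate values the NLU matched for it.
    # Assumes every slot the template needs is present, even if empty.
    slots: Dict[str, List[str]] = field(default_factory=dict)


def render(intent: ParsedIntent) -> str:
    """Return one response string for any channel, asking when unsure."""
    for slot, values in intent.slots.items():
        if not values:
            # Slot not filled yet: prompt the user for it.
            return MISSING_PROMPT.format(slot=slot)
        if len(values) > 1:
            # Ambiguous slot: ask the user to pick rather than guessing.
            return DISAMBIGUATION_PROMPT.format(options=" or ".join(values))
    # Every slot has exactly one value: fill the shared template.
    filled = {slot: values[0] for slot, values in intent.slots.items()}
    return TEMPLATES[intent.name].format(**filled)


print(render(ParsedIntent("order_food", {"dish": ["pizza"], "restaurant": ["Luigi's"]})))
print(render(ParsedIntent("order_food", {"dish": ["pizza"], "restaurant": ["Luigi's", "Mario's"]})))
```

Because both channels call the same `render`, rewording a template changes the voice and chat responses together.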

We found that people stay in a loop, so we let Maya “Assume”

Our research showed that users tend to repeat themselves: they order the same food, check the weather at regular times, and listen to the same songs. To build on this, we experimented with personal "Assumptions" in Maya, letting the assistant anticipate and fill in details from a user's patterns when they invoked specific tasks, while still maintaining contextual accuracy.
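One way the "Assumptions" idea could work is sketched below in Python, under assumptions of our own: each user's past requests are stored as slot dictionaries, and a missing slot is filled only when one value clearly dominates that history. The thresholds `MIN_SHARE` and `MIN_HISTORY` and the `assume_slots` function are hypothetical, not Maya's documented rules. Values stated explicitly in the current request always win, which is one simple way to preserve contextual accuracy.

```python
from collections import Counter
from typing import Dict, List

# Hypothetical thresholds: only assume a value the user chose in at least
# 60% of past requests, and only once there are a few requests of history.
MIN_SHARE = 0.6
MIN_HISTORY = 3


def assume_slots(request: Dict[str, str], history: List[Dict[str, str]]) -> Dict[str, str]:
    """Fill slots missing from `request` using the user's past choices."""
    filled = dict(request)
    slot_names = {name for past in history for name in past}
    for name in slot_names:
        if name in filled:
            continue  # explicit value in this request: never override it
        values = [past[name] for past in history if name in past]
        if len(values) < MIN_HISTORY:
            continue  # not enough evidence to assume anything yet
        value, count = Counter(values).most_common(1)[0]
        if count / len(values) >= MIN_SHARE:
            filled[name] = value
    return filled


history = [{"dish": "pizza", "restaurant": "Luigi's"}] * 3 + [{"dish": "pasta", "restaurant": "Luigi's"}]
print(assume_slots({"dish": "pasta"}, history))
```

Requiring both a minimum history and a dominant share keeps the assistant from "assuming" after a single one-off order.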

We even made an accountant mode

The calculator feature presented a unique research opportunity. Most voice assistants and LLMs require a complete statement before they can calculate; we instead designed Maya's calculator to keep listening for numbers throughout a session and answer the user's requests based on everything entered so far. This approach was well received in 2018, at a time when n-gram-based NLP was doing the heavy lifting.
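The session-based calculator can be pictured as a small stateful listener. This is a hypothetical Python illustration, not Maya's n-gram pipeline: it assumes speech recognition already returns numbers as digits, and the trigger words (`total`, `sum`, `average`, `clear`) and the `AccountantSession` class are made up for the example. Numbers are collected silently across utterances, and any request is answered from everything heard in the session.

```python
import re
from typing import List, Optional

# Integers or decimals, with an optional leading minus sign.
NUMBER = re.compile(r"-?\d+(?:\.\d+)?")
# Whole-word triggers, so e.g. "consumed" doesn't match "sum".
TOTAL = re.compile(r"\b(total|sum)\b")
AVERAGE = re.compile(r"\baverage\b")
CLEAR = re.compile(r"\bclear\b")


class AccountantSession:
    """Keeps listening for numbers and answers requests about them."""

    def __init__(self) -> None:
        self.numbers: List[float] = []

    def hear(self, utterance: str) -> Optional[str]:
        text = utterance.lower()
        if TOTAL.search(text):
            # Start at 0.0 so an empty session still yields a float.
            return f"The total is {self._fmt(sum(self.numbers, 0.0))}."
        if AVERAGE.search(text):
            if not self.numbers:
                return "I haven't heard any numbers yet."
            mean = sum(self.numbers) / len(self.numbers)
            return f"The average is {self._fmt(mean)}."
        if CLEAR.search(text):
            self.numbers.clear()
            return "Cleared."
        # No request: quietly collect any numbers and keep listening.
        self.numbers.extend(float(n) for n in NUMBER.findall(text))
        return None

    @staticmethod
    def _fmt(x: float) -> str:
        # Say "42" rather than "42.0" for whole results.
        return str(int(x)) if x.is_integer() else f"{x:.2f}"


session = AccountantSession()
for line in ["120 for groceries", "45.50 for fuel", "and 30", "what's the total?", "average"]:
    reply = session.hear(line)
    if reply:
        print(reply)
```

Keeping state per session, rather than per utterance, is what lets the user say numbers one at a time and ask for a result whenever they like.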

We built a fun whisper experience with SSML

SSML (Speech Synthesis Markup Language) let us control aspects of speech such as pronunciation, volume, and pitch. With it, we created a fun feature: when Maya detected that a user was whispering, it whispered back, a playful touch we introduced before similar features appeared in Alexa. 😊
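For a sense of what such markup looks like, here is a hypothetical Python helper, not Maya's code. The `user_whispered` flag is assumed to come from a separate whisper detector that isn't shown. The helper uses only the standard SSML `<speak>` and `<prosody>` elements; lowering volume and rate only approximates a whisper, and some engines offer a dedicated effect instead (Alexa, for example, supports `<amazon:effect name="whispered">`).

```python
from xml.sax.saxutils import escape


def to_ssml(text: str, user_whispered: bool) -> str:
    """Wrap a response in SSML, answering softly if the user whispered.

    `user_whispered` is assumed to come from a separate whisper detector.
    """
    body = escape(text)  # escape &, < and > so the text can't break the markup
    if user_whispered:
        # Standard SSML prosody: a quiet, slow voice approximating a whisper.
        body = f'<prosody volume="x-soft" rate="slow">{body}</prosody>'
    return f"<speak>{body}</speak>"


print(to_ssml("Turning off the lights. Good night!", user_whispered=True))
print(to_ssml("Tom & Jerry starts at 8.", user_whispered=False))
```

Escaping the response text first matters because TTS engines reject malformed SSML, and a stray `&` in a song or show title is enough to break it.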